The asset and investment management industry is undergoing a major transformation driven by growing assets, sustained margin pressure, and demand for faster decision-making. As firms look to build investment management platform capabilities that can keep pace with modern markets, they face a fundamental shift in how data, technology, and operations must work together.

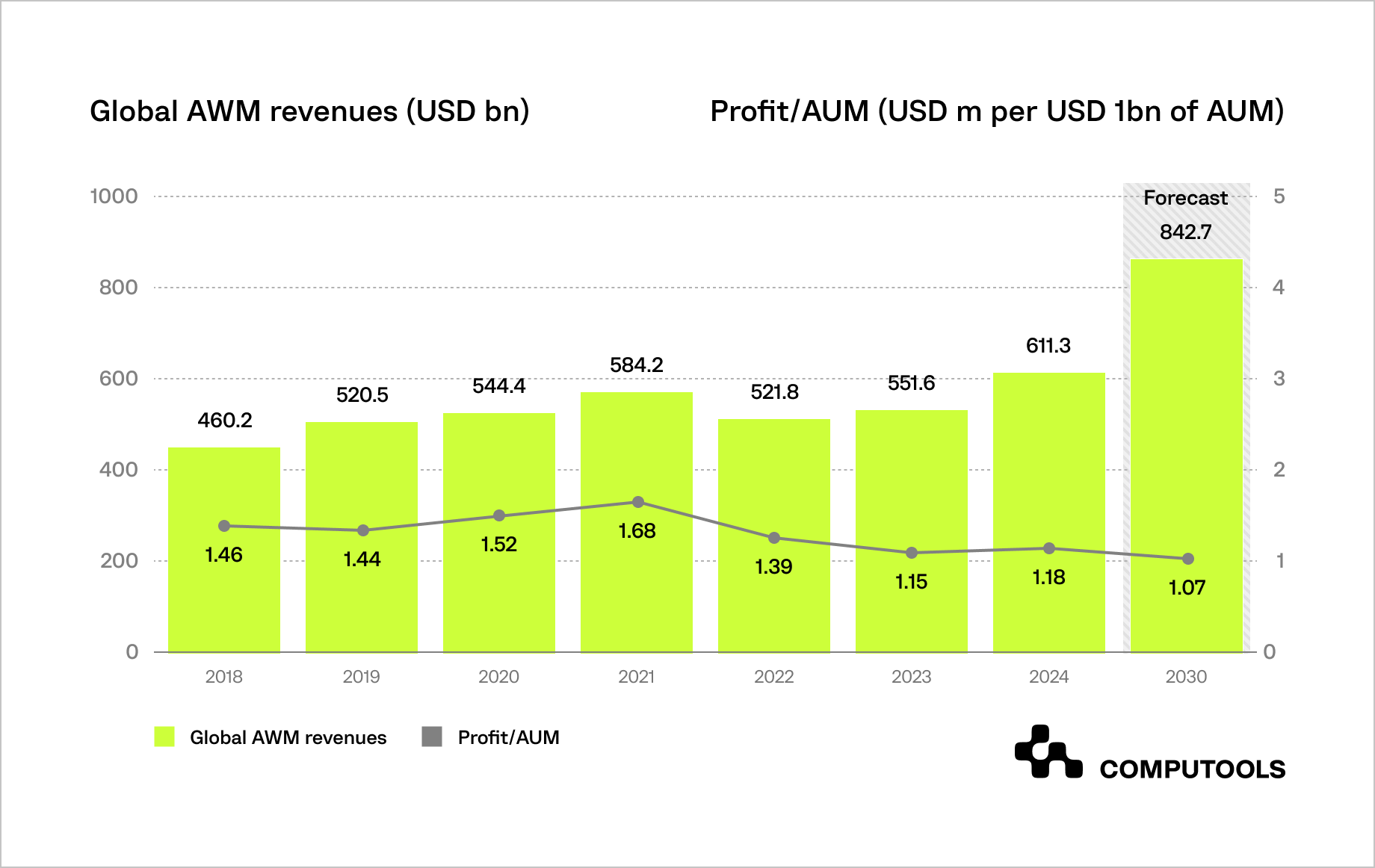

According to PwC, the asset and wealth management industry finds itself in a paradoxical position. On the one hand, global assets under management (AUM) are expected to grow from approximately $139 trillion today to $200 trillion by 2030, creating up to $230 billion in new revenue opportunities over the next five years for firms that can capture this growth.

On the other hand, scale and asset growth no longer guarantee profitability. PwC notes that industry margins are under sustained pressure: profit as a share of AUM has already declined by around 19% since 2018 and is projected to fall by a further 9% by 2030.

In effect, asset managers are responsible for ever-larger pools of capital while generating proportionally less profit from them.

In this environment, traditional models based on fragmented systems, manual processes, and delayed data are becoming financially unsustainable. To protect margins, asset and wealth managers must rethink the efficiency of core processes from analytics to real-time investment decisions.

This is where the quality and speed of market data move from being an IT optimization to a strategic lever for profitability. Investment management platforms powered by real-time market data allow firms to respond faster to market movements, reduce operational overhead, improve risk accuracy, and scale without a proportional increase in costs.

In the sections that follow, we explain how to build an investment management platform with real-time market data in practice, drawing on architectural principles, data-streaming patterns, and real-world design considerations to support a scalable investment platform.

How we built a real-time investment management platform for an Australian financial company

To illustrate how these challenges are addressed in practice, consider our collaboration with an Australian financial firm that manages an online investment and portfolio platform.

The client needed a high-performance system capable of processing large volumes of financial data, supporting multiple asset classes, and delivering accurate risk and income calculations at scale. Performance and data accuracy were critical, as delayed or inconsistent results would directly affect investment decisions. This level of complexity is typical for enterprise-grade investment software development, where calculation speed and reliability cannot be compromised.

We designed and delivered a scalable investment management platform that unified portfolio management, risk analysis, and market data processing in a single system. This solution enabled the client to efficiently handle complex financial data, offer diverse investment opportunities, and deliver a seamless user experience, resulting in a 46% increase in its customer base and significant profit growth.

Our team covered the full delivery lifecycle from architecture and solution design to engineering, deployment, and ongoing support. Particular focus was placed on scalability, performance under load, and the stability of financial calculations, which are essential for production-ready fintech software development services.

This delivery experience sets the stage for the next section, which walks through building an investment management platform step by step, based on the architectural and technical principles needed to support real-time data and long-term scalability.

How to build an investment management platform with real-time market data

Let’s review the practical steps for building a fintech investment platform.

Step 1. Define platform scope, users, and latency requirements

The starting point of any investment platform development effort is a precise definition of the decisions the platform must support and the time constraints under which it must operate. This requires clearly identifying who the users are (self-directed investors, advisors, portfolio managers), how responsibilities and access are distributed, and whether the platform operates in an informational, advisory, or execution-enabled model.

These choices directly affect system behavior. User roles determine authorization models and data visibility, while the operating model defines consistency requirements for balances, transactions, and reporting. At the same time, latency expectations must be defined explicitly. “Real-time” should be translated into concrete targets for market data freshness, portfolio valuation updates, and dashboard response times, as these parameters drive architecture, data provider selection, and infrastructure costs.
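
To make this concrete, here is a minimal Python sketch that records latency expectations as explicit, testable numbers instead of the word "real-time". The `LatencyTargets` fields, the `violations` helper, and the threshold values are hypothetical illustrations, not recommendations; real values come from product requirements and data provider capabilities.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class LatencyTargets:
    """Hypothetical targets that turn "real-time" into measurable numbers."""
    max_price_age: timedelta          # market data freshness
    max_revaluation_delay: timedelta  # price tick -> updated portfolio value
    max_dashboard_p95: timedelta      # 95th-percentile dashboard response time


# Illustrative values only.
RETAIL_TARGETS = LatencyTargets(
    max_price_age=timedelta(seconds=15),
    max_revaluation_delay=timedelta(seconds=2),
    max_dashboard_p95=timedelta(milliseconds=800),
)


def violations(targets: LatencyTargets, price_ts: datetime, valued_ts: datetime,
               now: datetime, dashboard_p95: timedelta) -> list[str]:
    """Return the names of the targets breached by one set of observed timings."""
    breached = []
    if now - price_ts > targets.max_price_age:
        breached.append("max_price_age")
    if valued_ts - price_ts > targets.max_revaluation_delay:
        breached.append("max_revaluation_delay")
    if dashboard_p95 > targets.max_dashboard_p95:
        breached.append("max_dashboard_p95")
    return breached


now = datetime(2024, 5, 1, 0, 0, 30, tzinfo=timezone.utc)
print(violations(RETAIL_TARGETS, price_ts=now - timedelta(seconds=20),
                 valued_ts=now - timedelta(seconds=19), now=now,
                 dashboard_p95=timedelta(milliseconds=500)))  # ['max_price_age']
```

Writing targets down this way lets the same numbers drive provider selection, monitoring thresholds, and performance tests later in the project.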

Asset coverage must also be constrained early. Supporting equities, ETFs, or other instruments introduces different pricing rules, corporate actions, and income logic. Defining a limited, well-scoped asset universe for the initial release allows the core domain model to remain consistent and extensible.

In our Australian investment platform project, these decisions were made upfront. The client required accurate risk and income calculations for local and global equities under strict performance constraints, which meant that user roles, asset scope, and latency targets were formalized before any architectural design began. This eliminated rework later in the project and ensured predictable system behavior as data volumes grew.

Step 2. Establish regulatory, security, and audit requirements

For any production-grade investment management software, regulatory, security, and audit requirements must be treated as design constraints rather than implementation details. These constraints define how data flows through the system, how calculations are stored and reproduced, and how access is controlled at every level.

At this stage, teams must formalize identity and access models, including authentication mechanisms, role-based permissions, and portfolio-level data visibility. Auditability is equally critical: pricing changes, calculation logic updates, user actions, and administrative operations must be logged in a way that is immutable, traceable, and suitable for regulatory review. These requirements influence database design, event logging, and even how calculation engines are versioned over time.
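
Immutability is usually enforced by storage (for example, append-only tables), but the log itself can also be made tamper-evident. The sketch below, assuming a single writer and JSON-serializable payloads, chains each audit entry to the SHA-256 hash of the previous one so that later edits are detectable. The `AuditLog` class is hypothetical and is not a substitute for durable, access-controlled storage.

```python
import hashlib
import json

GENESIS = "0" * 64   # placeholder "previous hash" for the first entry


def _entry_hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained audit trail (tamper-evidence sketch, not storage)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, actor: str, action: str, payload: dict, ts: str) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "action": action, "payload": dict(payload),
                "ts": ts, "prev_hash": prev_hash}
        entry_hash = _entry_hash(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Editing any entry, or removing one mid-chain, breaks verification.
        Detecting truncation of the newest entries also requires storing the
        latest hash somewhere outside the log."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _entry_hash(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```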

Security requirements should be mapped directly to system components. This includes encryption of sensitive data at rest and in transit, secure key management, session control, and protection against unauthorized access. Importantly, these controls must be enforceable by the platform itself, rather than relying on external processes or documentation.

In our Australian investment platform delivery, auditability and data protection were addressed early, given the platform’s role in risk and income calculations. Logging and access control were embedded into the core services rather than added later, ensuring that every portfolio calculation and data update could be reviewed and reproduced without ambiguity.

By the end of this stage, regulatory, security, and audit requirements should be documented and mapped to specific architectural components. This prevents costly structural rework later and ensures that compliance does not hinder scalability.

Step 3. Design the market data strategy and ingestion pipeline

Market data must be treated as a core subsystem of the platform. At this stage, teams define the required market data, how it enters the system, how it is normalized, and how failures are handled without compromising calculations or user trust.

The process starts with specifying data coverage and semantics. This includes asset classes, exchanges, trading calendars, time zones, and corporate actions. These parameters determine how prices are interpreted, when instruments are considered active or inactive, and how valuation logic behaves during market closures or exceptional events. Historical depth must also be defined early, as it directly affects storage strategy and analytics capabilities.

The ingestion pipeline should handle continuous streams, outages, and schema changes. Data must be validated, deduplicated, normalized, and mapped to internal identifiers before use in valuation or risk engines. Buffering and backpressure prevent market spikes from degrading performance.
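
A minimal Python sketch of these ingestion steps follows, assuming a vendor feed that delivers dicts with hypothetical `sym`, `px`, and `seq` fields and a per-instrument sequence number. It validates, de-duplicates, maps vendor symbols to internal identifiers, and uses a bounded `queue.Queue` so a full buffer blocks the reader (backpressure) instead of growing memory without limit. Dropping out-of-order ticks is a simplifying policy choice, not a requirement.

```python
import queue
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation


@dataclass(frozen=True)
class Tick:
    instrument_id: str   # internal identifier, not the vendor symbol
    price: Decimal
    seq: int             # vendor sequence number, used for de-duplication


class Ingestor:
    """Validate, de-duplicate, normalize, and buffer raw vendor ticks (illustrative)."""

    def __init__(self, symbol_map: dict[str, str], max_buffer: int = 10_000):
        self.symbol_map = symbol_map                 # vendor symbol -> internal id
        self.last_seq: dict[str, int] = {}           # highest accepted seq per instrument
        self.buffer: queue.Queue = queue.Queue(maxsize=max_buffer)
        self.rejected = 0

    def ingest(self, raw: dict) -> bool:
        """Return True if the tick was accepted into the buffer."""
        try:
            price = Decimal(str(raw["px"]))
            seq = int(raw["seq"])
        except (KeyError, TypeError, ValueError, InvalidOperation):
            self.rejected += 1                        # malformed record
            return False
        instrument_id = self.symbol_map.get(raw.get("sym"))
        if instrument_id is None or not price.is_finite() or price <= 0:
            self.rejected += 1                        # unknown symbol or invalid price
            return False
        if seq <= self.last_seq.get(instrument_id, -1):
            return False                              # duplicate or out-of-order tick
        # Blocks while the buffer is full (backpressure); raises queue.Full after 1 s.
        self.buffer.put(Tick(instrument_id, price, seq), timeout=1.0)
        self.last_seq[instrument_id] = seq
        return True
```

Downstream valuation and risk consumers read only `Tick` objects from the buffer, so they never see vendor symbols or unvalidated prices.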

It’s also crucial to define behavior under degraded conditions. The platform must distinguish between fresh, delayed, and unavailable data and communicate this state consistently across calculations and interfaces. When data cannot be fresh, clear rules are needed for fallback pricing, cache use, and staleness indicators.
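
One way to encode these states is sketched below in Python. The 15-second and 24-hour thresholds, and the rule that a delayed price may still be used as a flagged fallback, are assumptions for illustration; actual rules depend on the asset class, market hours, and provider licensing.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from decimal import Decimal
from enum import Enum
from typing import Optional


class Freshness(Enum):
    FRESH = "fresh"
    DELAYED = "delayed"
    UNAVAILABLE = "unavailable"


@dataclass(frozen=True)
class ResolvedPrice:
    price: Optional[Decimal]      # None means "do not value with this"
    as_of: Optional[datetime]     # shown to users next to the number
    freshness: Freshness


def resolve_price(last_price: Optional[Decimal], last_ts: Optional[datetime], now: datetime,
                  fresh_for: timedelta = timedelta(seconds=15),
                  usable_for: timedelta = timedelta(hours=24)) -> ResolvedPrice:
    """Classify the last known price; both thresholds are illustrative assumptions."""
    if last_price is None or last_ts is None:
        return ResolvedPrice(None, None, Freshness.UNAVAILABLE)
    age = now - last_ts
    if age <= fresh_for:
        return ResolvedPrice(last_price, last_ts, Freshness.FRESH)
    if age <= usable_for:
        # Fallback: keep using the cached price, but flag it as delayed everywhere.
        return ResolvedPrice(last_price, last_ts, Freshness.DELAYED)
    return ResolvedPrice(None, last_ts, Freshness.UNAVAILABLE)


t0 = datetime(2024, 5, 1, 0, 0, tzinfo=timezone.utc)
print(resolve_price(Decimal("45.10"), t0, t0 + timedelta(minutes=5)).freshness)  # Freshness.DELAYED
```

Because the freshness state travels with the price, both calculations and the UI can show the same status and timestamp.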

In our Australian case, these considerations were critical due to the volume of financial data and the need for fast, repeatable calculations. The ingestion pipeline was designed to ensure stable portfolio valuation even when external data sources experienced latency or partial unavailability, preventing inconsistent risk and income results.

A well-designed ingestion layer is the foundation for real-time market data integration, ensuring that downstream components operate on reliable, consistent inputs and that performance remains predictable as data volumes and user activity increase.

Step 4. Define the core domain model: portfolios, positions, valuation, income, risk

Once market data ingestion is defined, the platform needs a clear financial domain model to ensure consistent, auditable results. This step determines how portfolios, transactions, and calculations are represented and how all financial outputs can be reproduced from underlying data.

The foundation is the portfolio ledger. Accounts, portfolios, positions, and all events that modify them (trades, transfers, fees, and corporate actions) must be modeled in a traceable way. Valuation, income, and risk calculations should always be derivable from this event history and the corresponding market prices, enabling verification and audit.
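
As a minimal sketch of this idea (an event-sourced ledger), the Python below derives positions and cash solely by replaying an ordered list of events. The `LedgerEvent` fields and event kinds are hypothetical, and a real ledger would also track accounts, currencies, and settlement status.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class LedgerEvent:
    seq: int                   # ordering within the portfolio
    kind: str                  # e.g. "trade", "deposit", "fee" (kept for audit and reporting)
    instrument: Optional[str]  # None for cash-only events
    quantity: Decimal          # signed units: + in, - out
    cash: Decimal              # signed cash impact


def replay(events: list[LedgerEvent]) -> tuple[dict[str, Decimal], Decimal]:
    """Rebuild positions and cash from the event history alone."""
    positions: defaultdict = defaultdict(Decimal)
    cash = Decimal("0")
    for event in sorted(events, key=lambda e: e.seq):
        if event.instrument is not None:
            positions[event.instrument] += event.quantity
        cash += event.cash
    # Closed positions (net zero) are dropped from the derived state.
    return {k: v for k, v in positions.items() if v != 0}, cash
```

Because state is never stored independently of the events, any historical position can be reproduced by replaying the events up to that point.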

Position accounting rules must be explicit, covering lot handling, P&L, fee attribution, and currency conversion. Core events like splits and dividends should be handled deterministically to prevent performance distortions.
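
The sketch below shows what "explicit" can mean for lot handling: FIFO disposal, realized P&L, fees capitalized into cost on purchase and deducted on sale, and a split applied deterministically to open lots. FIFO and this fee treatment are assumptions chosen for illustration; the right rules depend on jurisdiction and product, and currency conversion is omitted.

```python
from collections import deque
from decimal import Decimal


class FifoPosition:
    """One instrument's open lots, disposed first-in-first-out (one possible policy)."""

    def __init__(self) -> None:
        self.lots: deque = deque()          # each lot is [quantity, unit_cost]
        self.realized_pnl = Decimal("0")

    @property
    def quantity(self) -> Decimal:
        return sum((lot[0] for lot in self.lots), Decimal("0"))

    def buy(self, qty: Decimal, price: Decimal, fee: Decimal = Decimal("0")) -> None:
        # Assumed rule: purchase fees are added to the lot's cost base.
        self.lots.append([qty, (qty * price + fee) / qty])

    def sell(self, qty: Decimal, price: Decimal, fee: Decimal = Decimal("0")) -> Decimal:
        if qty > self.quantity:
            raise ValueError("sell quantity exceeds holding")
        remaining, pnl = qty, -fee          # assumed rule: sale fees reduce realized P&L
        while remaining > 0:
            lot = self.lots[0]
            take = min(remaining, lot[0])
            pnl += take * (price - lot[1])
            lot[0] -= take
            remaining -= take
            if lot[0] == 0:
                self.lots.popleft()
        self.realized_pnl += pnl
        return pnl

    def split(self, ratio: Decimal) -> None:
        """N-for-1 split: quantity x N, unit cost / N, total cost base unchanged."""
        for lot in self.lots:
            lot[0] *= ratio
            lot[1] /= ratio
```

Applying the split to cost as well as quantity is what keeps post-split P&L from showing an artificial jump.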

Valuation logic needs clear price rules, refresh cycles, and fallback strategies for missing data. Income modeling must specify how dividends, coupons, and fees affect net income, even when taxes are deferred. Risk calculations, such as exposure, concentration, and scenario sensitivity, must use the same positions and prices as the valuation.
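
A small Python sketch of the "same data" principle: market value and concentration weights are computed from one price snapshot, and the function refuses to run if any held instrument lacks a price rather than silently valuing it at zero. The function name and inputs are hypothetical.

```python
from decimal import Decimal


def value_and_weights(positions: dict[str, Decimal], prices: dict[str, Decimal],
                      cash: Decimal) -> tuple[Decimal, dict[str, Decimal]]:
    """Portfolio value and per-holding weights, both from ONE price snapshot."""
    missing = sorted(set(positions) - set(prices))
    if missing:
        # Fail loudly instead of valuing unpriced holdings at zero.
        raise KeyError(f"no price for {missing}")
    market_values = {s: qty * prices[s] for s, qty in positions.items()}
    total = sum(market_values.values(), cash)
    weights = {s: mv / total for s, mv in market_values.items()} if total else {}
    return total, weights
```

A concentration rule could then compare each weight against a limit (the limit itself being a policy decision), knowing that the weights and the displayed portfolio value can never disagree.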

In our FDA project, defining this model early was essential to support high-volume calculations across local and global equities without inconsistencies. This step turns the platform into a coherent set of financial investment software solutions with a single source of truth for all portfolio analytics.

Step 5. Choose architecture patterns and component boundaries

After defining the domain model, select architectural patterns that meet your latency targets, handle expected data volumes, and accommodate growth without causing instability. The goal is not maximum scalability but predictable performance in production.

Most investment platforms benefit from a modular architecture with clearly defined component responsibilities. Core domains such as identity and access, portfolio ledger, market data ingestion, valuation and risk calculations, and reporting should be separated by explicit boundaries and data contracts. This separation allows teams to scale or modify individual components without cascading changes across the system.

Event-driven patterns are typically introduced around market data and recalculation flows. Price updates should trigger downstream valuation and risk updates asynchronously rather than blocking user-facing requests. At the same time, not all data requires the same consistency guarantees. Balances, transactions, and audit records usually demand strong consistency, while aggregated analytics and visualizations can tolerate controlled eventual consistency.
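
The sketch below illustrates the pattern with Python's `asyncio`: publishing a price update only enqueues it, and a background worker performs the revaluation, so the publisher never waits for the calculation. The in-memory `asyncio.Queue` stands in for a real message broker, and the `recalculation_worker` and `demo` names are hypothetical.

```python
import asyncio
from decimal import Decimal


async def recalculation_worker(price_events: asyncio.Queue,
                               holdings: dict[str, dict[str, Decimal]],
                               prices: dict[str, Decimal],
                               valuations: dict[str, Decimal]) -> None:
    """Consume price updates and revalue affected portfolios off the request path."""
    while True:
        symbol, price = await price_events.get()
        try:
            prices[symbol] = price
            for portfolio_id, positions in holdings.items():
                if symbol in positions:
                    valuations[portfolio_id] = sum(
                        (qty * prices[s] for s, qty in positions.items()), Decimal("0"))
        finally:
            price_events.task_done()   # mark done even if a calculation fails


async def demo() -> dict[str, Decimal]:
    queue: asyncio.Queue = asyncio.Queue()
    holdings = {"P1": {"BHP": Decimal("10")}, "P2": {"CBA": Decimal("5")}}
    prices = {"BHP": Decimal("45"), "CBA": Decimal("120")}
    valuations: dict[str, Decimal] = {}
    worker = asyncio.create_task(recalculation_worker(queue, holdings, prices, valuations))
    queue.put_nowait(("BHP", Decimal("46")))   # publisher returns immediately
    await queue.join()                         # demo only: wait for the worker to catch up
    worker.cancel()
    return valuations


print(asyncio.run(demo()))  # {'P1': Decimal('460')}
```

Only P1 is revalued because only P1 holds the instrument whose price changed; P2's valuation is untouched.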

Caching and state management strategies must be aligned with these decisions. Frequently accessed data, such as latest prices, portfolio snapshots, and summary metrics, should be cached with clear invalidation rules, while authoritative records remain in durable storage. This balance is essential for maintaining performance as user activity and data throughput increase.
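
The sketch below shows a read-through snapshot cache in Python with both a time-to-live and explicit invalidation, for example when a price update changes a portfolio's value. `SnapshotCache` and its injectable clock are hypothetical; production systems typically use a shared cache service, with the durable store remaining authoritative.

```python
import time
from typing import Any, Callable, Hashable


class SnapshotCache:
    """Read-through cache: entries expire after a TTL or are invalidated explicitly."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict = {}               # key -> (stored_at, value)

    def get(self, key: Hashable, load: Callable[[], Any]) -> Any:
        entry = self._entries.get(key)
        if entry is not None and self.clock() - entry[0] < self.ttl:
            return entry[1]                    # fresh cache hit
        value = load()                         # reload from the authoritative store
        self._entries[key] = (self.clock(), value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Call when an update (e.g. a new price) makes the cached snapshot wrong."""
        self._entries.pop(key, None)
```

The TTL bounds how stale a snapshot can get if an invalidation is missed, while explicit invalidation keeps hot portfolios current without waiting for expiry.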

Our Australian investment platform’s architecture was driven by the need to process large financial datasets and perform complex calculations under strict performance constraints. Separating ingestion, calculation, and presentation layers allowed scalable growth without sacrificing correctness.

These decisions collectively define the trading and investment platform architecture, establishing how data moves through the system, where calculations occur, and how reliability is maintained as the platform evolves.

Step 6. Implement the portfolio ledger and calculation engines

With architecture boundaries established, implementation should focus on the components that define correctness and auditability: the portfolio ledger and the calculation layer. Everything exposed to users (performance metrics, income projections, and risk indicators) depends on these foundations being deterministic, traceable, and stable under load.

Implementation should start with an immutable transaction ledger that records every portfolio-changing event in real time. This includes trades, transfers, fees, and corporate actions. The ledger must support reconciliation and state replay to enable reliable reconstruction of portfolio states and historical performance. This design approach is fundamental for any fintech investment platform, where financial results must be explainable and verifiable at any point in time.
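
Reconciliation can be sketched as a comparison between ledger-derived positions and an external statement, such as a custodian or broker position file. The `reconcile` function and its zero default tolerance are assumptions for illustration; any break it reports would be investigated and resolved with a corrective ledger event, not by editing history.

```python
from decimal import Decimal


def reconcile(ledger: dict[str, Decimal], statement: dict[str, Decimal],
              tolerance: Decimal = Decimal("0")) -> dict[str, tuple[Decimal, Decimal]]:
    """Return {instrument: (ledger_qty, statement_qty)} for every mismatch."""
    breaks = {}
    for instrument in sorted(set(ledger) | set(statement)):
        ours = ledger.get(instrument, Decimal("0"))
        theirs = statement.get(instrument, Decimal("0"))
        if abs(ours - theirs) > tolerance:
            breaks[instrument] = (ours, theirs)
    return breaks
```

Checking the union of both sides catches instruments that exist only in the ledger or only on the statement, which are common sources of portfolio drift.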

Calculation engines should be built as independent, testable components rather than embedded in user request flows. They must share a single data model for valuation, income, and risk calculations to prevent inconsistencies. Incremental recalculation is key: when prices or positions change, only the affected portfolios should be updated, not the entire system. Controls such as computation budgets, snapshot caching, and versioned outputs keep latency consistent and results auditable, and the layer should absorb market data bursts without causing downstream failures.
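
A minimal sketch of incremental recalculation: an index from instrument to the portfolios that hold it lets a batch of price updates revalue only the affected portfolios. `IncrementalValuer` is hypothetical, and position changes would also need to update the index, which is omitted here.

```python
from collections import defaultdict
from decimal import Decimal


class IncrementalValuer:
    """Revalue only the portfolios that hold an instrument whose price changed."""

    def __init__(self, holdings: dict[str, dict[str, Decimal]], prices: dict[str, Decimal]):
        self.holdings = holdings
        self.prices = dict(prices)
        self.index: defaultdict = defaultdict(set)      # instrument -> portfolio ids
        for pid, positions in holdings.items():
            for instrument in positions:
                self.index[instrument].add(pid)
        self.values = {pid: self._value(pid) for pid in holdings}

    def _value(self, pid: str) -> Decimal:
        return sum((qty * self.prices[i] for i, qty in self.holdings[pid].items()),
                   Decimal("0"))

    def on_prices(self, updates: dict[str, Decimal]) -> set[str]:
        """Apply a batch of price updates; return the ids that were recalculated."""
        self.prices.update(updates)
        affected = set().union(*(self.index.get(i, set()) for i in updates))
        for pid in affected:
            self.values[pid] = self._value(pid)
        return affected
```

Batching updates also means a portfolio touched by several price changes in one burst is recalculated once, not once per tick.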

In our practice, this separation between the ledger and calculation engines enabled high-volume statistical processing while keeping income and risk outputs consistent as the platform scaled.

Step 7. Build UI/UX for high-density financial information

Now, the focus shifts from system accuracy to how financial information is consumed and acted on. The challenge is organizing complex, data-rich content so users can make decisions confidently without misinterpreting numbers or missing risk signals.

Investment platforms typically expose large volumes of information: prices, performance history, allocations, income, and risk indicators. UI design must prioritize clarity and hierarchy, making it immediately clear what has changed, what matters now, and what actions are available. Market data freshness, valuation timestamps, and calculation assumptions should be visible but unobtrusive, so users always understand the context of the numbers they see.

User flows should focus on decision paths rather than screens. Dashboards should enable quick assessment of performance and exposure, with deeper views for instruments, transactions, and trends that preserve context. Notifications should be purposeful, highlighting key events rather than adding noise. Visualizations must be consistent, using the same data and logic as the backend, and must clearly communicate edge-case states such as market closures or delayed data.

In our investment platform project, UI decisions were closely tied to calculation behavior and data latency. By aligning interface logic with backend valuation and risk outputs, the platform delivered stable, interpretable insights even under heavy data loads.

This alignment is especially important for wealth management software, where transparency and trust are central to user adoption.

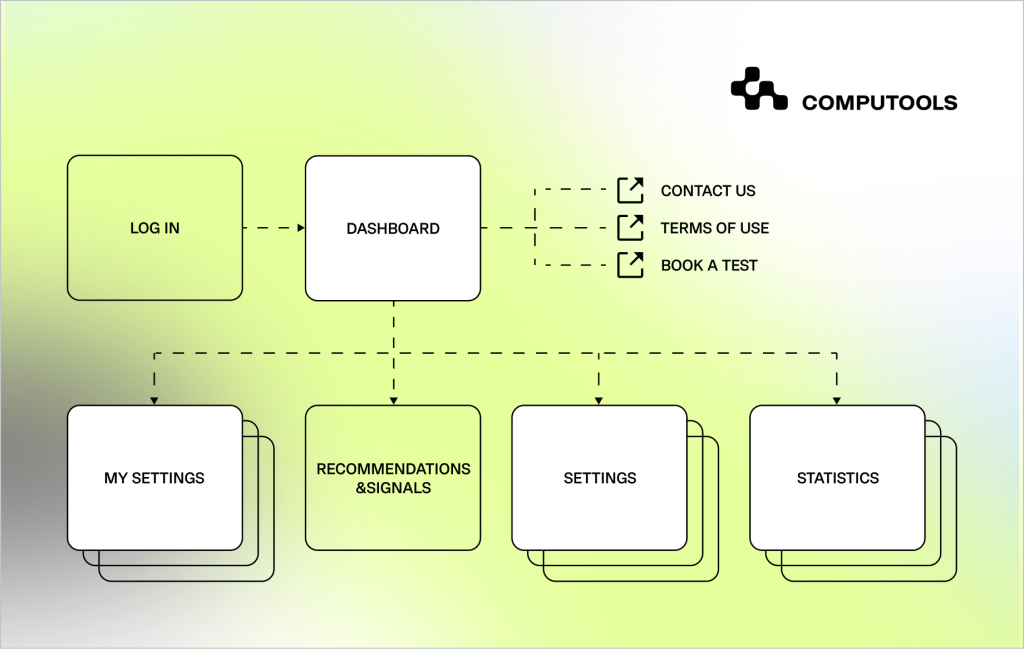

UX structure illustrating how complex investment data is distributed across functional screens rather than concentrated in a single interface.


Step 8. Integrations: banking/brokerage, payments, and identity

Integrations determine whether the platform can operate as a connected financial system rather than an isolated analytics layer. At this stage, external dependencies must be treated as production components with defined failure behavior, observability, and compliance implications.

• Brokerage and trading connectivity: define how orders, executions, positions, and corporate actions are synchronized; enforce idempotency, retries, and reconciliation rules to prevent duplicate execution or portfolio drift.

• Banking and cash movement: integrate deposits, withdrawals, and account verification flows; design explicitly for settlement delays, partial failures, and clear user-visible transaction states.

• Identity, KYC, and onboarding: connect verification and risk-profiling providers; persist decision evidence and timestamps to support audits, re-checks, and regulatory reviews.

• Market data providers: operate feeds as monitored subsystems with freshness checks, fallback behavior, and licensing-aware data-exposure rules.

• Notifications and communications: integrate email, SMS, and push services with delivery tracking and rate controls; ensure alerts are actionable and do not expose sensitive information.

• Compliance and reporting tools: support deterministic regulatory exports and internal controls derived from ledger and pricing snapshots.

• Third-party observability: implement structured logs with correlation IDs, error classification, and latency monitoring to detect provider-side changes early.
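
For brokerage connectivity, the idempotency and retry rules in the list above can be sketched as a thin wrapper around the broker client. This assumes the broker API accepts a client-supplied idempotency key and de-duplicates on it; `OrderGateway`, `TransientBrokerError`, and the `send` callable are hypothetical, and in production the key-to-order map would live in durable storage rather than memory.

```python
import time
from typing import Callable


class TransientBrokerError(Exception):
    """Hypothetical error for timeouts or 5xx responses from a broker API."""


class OrderGateway:
    """Retry wrapper around a hypothetical broker client that never double-submits."""

    def __init__(self, send: Callable[[str, dict], str], max_attempts: int = 3,
                 base_delay: float = 0.2, sleep: Callable[[float], None] = time.sleep):
        self.send = send                     # (idempotency_key, order) -> broker order id
        self.max_attempts = max_attempts
        self.base_delay = base_delay
        self.sleep = sleep
        self.submitted: dict[str, str] = {}  # idempotency key -> broker order id

    def submit(self, idempotency_key: str, order: dict) -> str:
        if idempotency_key in self.submitted:        # caller retried: return original result
            return self.submitted[idempotency_key]
        for attempt in range(self.max_attempts):
            try:
                # Same key on every attempt; the broker is ASSUMED to de-duplicate on it.
                broker_id = self.send(idempotency_key, order)
            except TransientBrokerError:
                if attempt == self.max_attempts - 1:
                    raise
                self.sleep(self.base_delay * 2 ** attempt)   # exponential backoff
                continue
            self.submitted[idempotency_key] = broker_id
            return broker_id
        raise RuntimeError("max_attempts must be at least 1")
```

The key is generated once, when the order is created, so a timeout followed by a retry cannot produce a second execution.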

In a custom investment management system, these integrations form part of the core architecture rather than peripheral extensions. Treating them as first-class components ensures that external failures do not compromise portfolio integrity, calculation correctness, or user trust.

Secure banking integrations are a critical foundation for modern fintech products, especially when building mobile-first investment and financial platforms. Read more in our article How to Build a Fintech Mobile App With Secure Banking Integrations.

Clarify your platform’s maturity, compliance needs, and performance expectations, then engage an expert team to scope and estimate your investment solution.

Step 9. Security and compliance implementation

At this stage, security and compliance move from design intent to enforceable system behavior. The goal is to ensure that every sensitive operation is technically constrained, observable, and auditable in production.

Security must be enforced at runtime across all services. Authentication, session handling, and role-based authorization should be applied consistently on the backend, with portfolio- and account-level access checks performed for every request. This prevents data exposure that could otherwise emerge as the platform evolves.
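
A minimal Python sketch of a per-request check that combines role permissions with portfolio-level assignment. The roles, permission strings, and `authorize` function are hypothetical; in a real system the check would run in a shared backend layer for every service rather than in individual handlers.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    INVESTOR = "investor"
    ADVISOR = "advisor"


# Hypothetical permission matrix derived from the access model.
PERMISSIONS: dict[Role, set[str]] = {
    Role.INVESTOR: {"portfolio:read"},
    Role.ADVISOR: {"portfolio:read", "portfolio:rebalance"},
}


@dataclass(frozen=True)
class Principal:
    user_id: str
    role: Role
    portfolio_ids: frozenset   # portfolios owned by, or assigned to, this user


class AccessDenied(Exception):
    pass


def authorize(principal: Principal, action: str, portfolio_id: str) -> None:
    """Raise AccessDenied unless the role allows the action AND the portfolio is in scope."""
    if action not in PERMISSIONS.get(principal.role, set()):
        raise AccessDenied(f"role {principal.role.value!r} may not {action}")
    if portfolio_id not in principal.portfolio_ids:
        raise AccessDenied(f"{principal.user_id} has no access to {portfolio_id}")
```

Checking both conditions on every call means a correct role alone never grants access to someone else's portfolio.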

Data protection should be implemented end to end. Financial and personal data must be encrypted in transit and at rest, with controlled key management and rotation. Services should process only the minimum data required for their function, reducing the overall attack surface.

Auditability is non-negotiable. The platform must record:

• user and administrative actions,

• pricing updates and data source changes,

• calculation logic versions and execution context.

These logs should be immutable and time-ordered, allowing teams to reconstruct portfolio states and decision paths without ambiguity. This capability is fundamental to secure investment management applications, where regulatory trust depends on explainable, verifiable behavior.
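
To illustrate recording calculation logic versions and execution context, the sketch below stores, with every result, the version tag of the calculation logic and a SHA-256 digest of the exact inputs, so a later rerun on archived inputs can be checked. `CALC_VERSION`, `CalcRecord`, and the helper functions are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from decimal import Decimal

CALC_VERSION = "valuation-1.4.0"  # hypothetical tag of the deployed calculation logic


@dataclass(frozen=True)
class CalcRecord:
    portfolio_id: str
    calc_version: str
    inputs_digest: str   # SHA-256 of the exact positions and prices used
    result: str          # Decimal stored as text to avoid float drift
    executed_at: str


def digest(obj: dict) -> str:
    # sort_keys gives a canonical encoding; Decimals are serialized via str().
    return hashlib.sha256(json.dumps(obj, sort_keys=True, default=str).encode()).hexdigest()


def run_valuation(portfolio_id: str, positions: dict[str, Decimal],
                  prices: dict[str, Decimal], executed_at: str) -> CalcRecord:
    total = sum((qty * prices[s] for s, qty in positions.items()), Decimal("0"))
    inputs = {"positions": positions, "prices": {s: prices[s] for s in positions}}
    return CalcRecord(portfolio_id, CALC_VERSION, digest(inputs), str(total), executed_at)


def reproduces(record: CalcRecord, positions: dict[str, Decimal],
               prices: dict[str, Decimal]) -> bool:
    """Re-run on archived inputs and check version, input digest, and result."""
    rerun = run_valuation(record.portfolio_id, positions, prices, record.executed_at)
    return (record.calc_version == CALC_VERSION
            and rerun.inputs_digest == record.inputs_digest
            and rerun.result == record.result)
```

If a rerun fails, the digest shows whether the inputs or the logic changed, which is exactly the question a reviewer will ask.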

In our project, security and audit controls were embedded directly into the core services that handle income and risk calculations. This ensured that every portfolio update and calculation could be reviewed as the system scaled, without introducing hidden compliance gaps.

Step 10. Testing strategy: correctness, performance, and security

Testing is the stage at which architectural decisions and domain models are validated under real operating conditions. For an investment system, this step confirms that calculations are correct, performance is predictable, and security controls work as intended under load.

Correctness testing focuses on financial determinism. Portfolio valuation, income recognition, and risk calculations must be validated against controlled datasets with known outcomes. Results should be reproducible across environments and over time, even as market data updates, calculation logic evolves, or historical data is reprocessed. This is essential to ensure that a portfolio management platform produces consistent, explainable results rather than approximate or state-dependent outputs.
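
A sketch of a correctness test against a small golden dataset, written in plain Python with `assert` so it also runs under pytest. The valuation function, cent-level rounding, and the banker's rounding rule (`ROUND_HALF_EVEN`) are assumptions; the point is that rounding is explicit and that expected outcomes are worked out independently of the code under test. The second case deliberately exercises a rounding tie.

```python
from decimal import ROUND_HALF_EVEN, Decimal


def portfolio_value(positions: dict[str, Decimal], prices: dict[str, Decimal]) -> Decimal:
    """Code under test: value rounded to cents with an explicit rounding rule."""
    raw = sum((qty * prices[s] for s, qty in sorted(positions.items())), Decimal("0"))
    return raw.quantize(Decimal("0.01"), rounding=ROUND_HALF_EVEN)


# Golden cases: (positions, prices, expected), with expectations worked out by hand.
GOLDEN_CASES = [
    ({"BHP": Decimal("100")}, {"BHP": Decimal("45.105")}, Decimal("4510.50")),
    ({"XYZ": Decimal("3")}, {"XYZ": Decimal("0.335")}, Decimal("1.00")),  # 1.005 ties to even
    ({}, {}, Decimal("0.00")),
]


def test_valuation_matches_golden_cases() -> None:
    for positions, prices, expected in GOLDEN_CASES:
        result = portfolio_value(positions, prices)
        assert result == expected, (positions, result, expected)
        # Re-running with reordered input must give an identical result.
        reordered = dict(reversed(list(positions.items())))
        assert portfolio_value(reordered, prices) == result


test_valuation_matches_golden_cases()
```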

Performance testing verifies that the platform can sustain expected workloads. Load and stress scenarios should simulate peak market activity, concurrent users, and bursts in pricing updates. These tests reveal bottlenecks in ingestion, calculation, caching, and persistence layers before they surface in production. Latency thresholds defined earlier must be enforced here to prevent silent degradation after launch.
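
The latency thresholds from Step 1 can be enforced as an automated gate. This sketch measures wall-clock latency in milliseconds and fails if the nearest-rank 95th percentile exceeds a budget; the helper names and budget are hypothetical, and a real load test would drive concurrent traffic with a dedicated tool rather than a single-threaded loop.

```python
import math
import time
from typing import Callable


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def measure_ms(fn: Callable[[], object], runs: int = 200) -> list[float]:
    """Wall-clock latency of each call to fn, in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def check_p95_budget(samples_ms: list[float], budget_ms: float) -> None:
    """Fail the run if the 95th-percentile latency exceeds the budget."""
    p95 = percentile(samples_ms, 95)
    if p95 > budget_ms:
        raise AssertionError(f"p95 {p95:.1f} ms exceeds budget of {budget_ms} ms")


# Hypothetical budget for revaluing one portfolio; replace fn with the real call.
check_p95_budget(measure_ms(lambda: sum(range(1_000))), budget_ms=50.0)
```

Gating on a percentile rather than the mean keeps occasional slow calls, which users do notice, from hiding behind a good average.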

Security testing ensures that protections hold under real conditions. Access controls, session handling, and data isolation must be validated across services, complemented by vulnerability scanning and penetration testing. The objective is to confirm that portfolio data and financial operations remain protected even under abnormal or adversarial scenarios.

In our Australian investment platform project, this phase was critical due to the volume of financial data and the complexity of statistical calculations. Dedicated test suites validated calculation accuracy under load, ensuring that performance optimizations did not compromise financial correctness as the system scaled.

Step 11. Deployment and operations

Deployment shifts a platform from controlled development to continuous operation, requiring reliable performance under live market conditions, variable data, and constant user activity. Release pipelines should be automated and predictable, with CI/CD processes including validation gates, controlled rollouts, and rollbacks to prevent regressions or data issues.

Environments must be clearly separated, and builds should be immutable so that the same tested artifact is what reaches production. Once live, monitoring is vital for tracking data freshness, ingestion lag, recalculation latency, error rates, and infrastructure health.

Alerts should be tied to user-impacting thresholds rather than raw metrics, enabling fast response when market feeds degrade, recalculations fall behind, or external dependencies behave unexpectedly. This is especially important for systems built around real-time financial data processing, where delays or silent failures can directly affect portfolio values and decision-making.
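
One simple way to avoid alerting on noisy raw metrics is to fire only when a user-impacting signal, such as ingestion lag, stays above its threshold for several consecutive checks. The class name, the 30-second threshold, and the three-check window below are assumptions for illustration.

```python
from collections import deque


class SustainedThresholdAlert:
    """Fire only while a metric has exceeded its threshold for N consecutive checks."""

    def __init__(self, name: str, threshold: float, consecutive: int):
        self.name = name
        self.threshold = threshold
        self.consecutive = consecutive
        self._recent: deque = deque(maxlen=consecutive)   # last N breach flags

    def observe(self, value: float) -> bool:
        self._recent.append(value > self.threshold)
        return len(self._recent) == self.consecutive and all(self._recent)


# Hypothetical rule: ingestion lag above 30 s for three consecutive checks.
lag_alert = SustainedThresholdAlert("ingestion_lag_seconds", threshold=30, consecutive=3)
print([lag_alert.observe(v) for v in [45, 50, 10, 40, 41, 42]])
# [False, False, False, False, False, True]
```

A single spike (45, 50) followed by recovery (10) does not page anyone; only the sustained breach at the end does.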

In our FDA Operator project, operational readiness was essential, given continuous market data ingestion and heavy computational workloads. Monitoring and alerting were designed around pricing latency and calculation throughput, allowing the team to detect and resolve issues before they affected portfolio analytics or user trust.

Effective deployment and operations ensure that the platform remains stable, observable, and resilient once exposed to live investors and market volatility.

Step 12. Post-launch scaling and roadmap governance

After launch, the primary challenge shifts from delivery to controlled evolution. Scaling an investment platform is not only a technical problem but also a governance problem: unmanaged growth in features, data sources, or asset classes quickly leads to rising costs, operational fragility, and inconsistent user experience.

Scaling decisions should be driven by clear priorities. Adding asset classes, analytics, or regions must be weighed against data complexity, computational load, regulatory constraints, and operational costs. Roadmap governance should keep the platform a coherent domain model as new features are added, rather than letting it become a collection of loosely connected features.

Data growth demands attention. Storage, recalculation, and licensing costs often grow faster than the user base. Teams should define retention policies, aggregation rules, and recalculation boundaries to keep performance predictable as the platform scales.
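
As an example of an aggregation rule, the sketch below downsamples raw ticks into daily open/high/low/close bars, which might be retained long-term while raw ticks are kept only for a limited window. The `DailyBar` structure and that retention split are hypothetical, and grouping by calendar date assumes timestamps are already expressed in the exchange's local time.

```python
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal


@dataclass(frozen=True)
class DailyBar:
    open: Decimal
    high: Decimal
    low: Decimal
    close: Decimal
    ticks: int   # how many raw ticks were folded into this bar


def daily_bars(ticks: list[tuple[datetime, Decimal]]) -> dict[str, DailyBar]:
    """Fold raw (timestamp, price) ticks into one OHLC bar per date."""
    bars: dict[str, DailyBar] = {}
    for ts, price in sorted(ticks):          # chronological order defines open/close
        day = ts.date().isoformat()
        bar = bars.get(day)
        if bar is None:
            bars[day] = DailyBar(price, price, price, price, 1)
        else:
            bars[day] = DailyBar(bar.open, max(bar.high, price), min(bar.low, price),
                                 price, bar.ticks + 1)
    return bars
```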

Organizational scalability matters as much as technical scalability. Clear ownership of domains, calculation logic, and data pipelines helps teams evolve the system safely without introducing regressions. Architectural decisions made early should be revisited periodically to confirm they still support business goals rather than constrain them.

This stage reflects a shift in mindset: the goal is no longer to ship a product, but to build investment management platform capabilities that can grow sustainably over time. When governance, architecture, and operations remain aligned, the platform can evolve without sacrificing correctness, performance, or trust.


Building an investment management platform: strategic advantages and market direction

Building an investment management platform is a strategic decision that defines how an organization operates, scales, and competes over time. As asset volumes grow and margin pressure increases, the ability to make fast, consistent, and well-governed investment decisions becomes a core business capability rather than a supporting function.

A well-designed platform creates this capability by unifying market data, portfolio logic, and analytics into a single operational foundation.

In practice, this translates into a set of tangible advantages that directly affect efficiency, risk control, and long-term adaptability:

1. Faster and more consistent decision-making. Unified pricing, valuation, and risk logic reduce delays between market events and portfolio actions, while preserving consistency across analytics and reporting.

2. Single source of truth for analytics. A platform designed as a financial data analytics platform consolidates market data, portfolio state, and calculation logic into a single source of truth, eliminating discrepancies between dashboards, reports, and regulatory views.

3. Scalable portfolio oversight. Automated ingestion, incremental recalculation, and centralized analytics allow teams to manage growing asset volumes and portfolio complexity without linear increases in operational overhead.

4. Improved governance and audit readiness. Analytical results remain traceable to underlying transactions, prices, and calculation versions, supporting regulatory reviews and internal controls.

5. Long-term adaptability. A platform-based approach makes it easier to introduce new asset classes, analytical models, or reporting requirements without destabilizing existing operations.

Together, these advantages explain why investment platforms are increasingly treated as long-term infrastructure rather than standalone software projects.

Why financial companies trust Computools

Computools focuses on building software for finance, where accuracy, resilience, and compliance directly impact revenue and trust. For over 12 years, we have been delivering complex financial systems that operate reliably under regulatory pressure, high data volumes, and continuous market change.

Our team includes 250+ engineers, and we have completed 400+ projects worldwide, with 20+ delivered specifically for the financial sector, including digital banking platforms, investment and wealth management systems, payment solutions, and risk-driven analytics products.

This experience allows us to approach custom finance software development with a deep understanding of real operational constraints, not just technical requirements.

Computools is ISO 9001 and ISO 27001 certified, compliant with GDPR and HIPAA, and a Microsoft Partner. Our solutions are trusted by global organizations such as Visa, Epson, IBM, Dior, Bombardier, and the British Council.

In finance, one of our cases involved building next-generation digital banking platforms integrated with Visa, enabling measurable business outcomes, including a 12% increase in market share among Gen Z customers for a Caribbean bank.

We help financial organizations modernize legacy systems, automate core operations, strengthen security, and turn fragmented data into actionable insights, so teams can focus on growth rather than operational risk. Looking for a finance-focused tech partner? Write us an e-mail: info@computools.com.

Computools

Software Solutions

Computools is a digital consulting and software development company that delivers innovative solutions to help businesses unlock tomorrow.
